AI

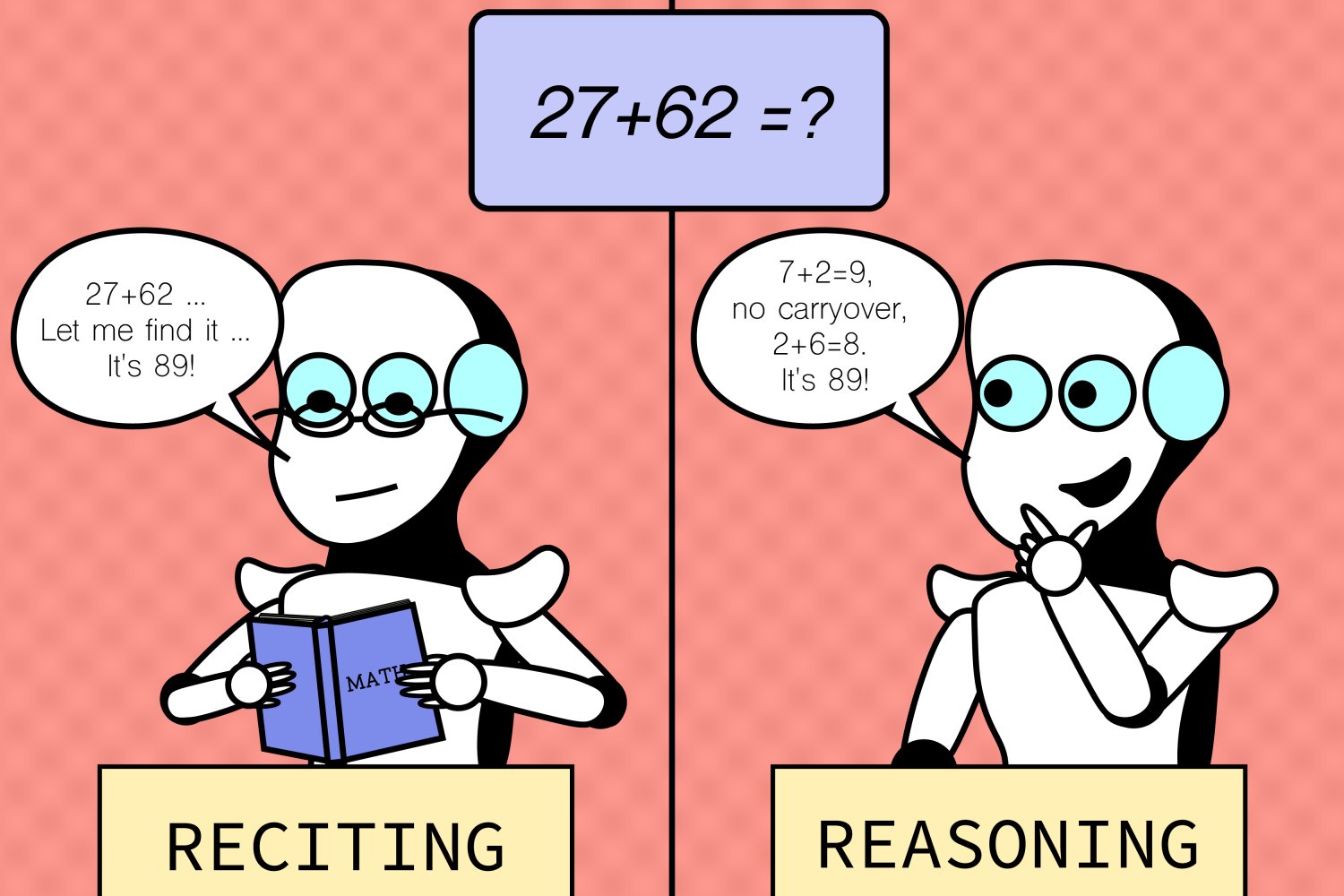

Is AI reasoning or simply reciting? This question is at the forefront of many minds when first learning about AI. It may be our first thought because belittling the power of AI gives us a sense of intellectual security. But does it have some root in truth? Despite its motivation, the question is a reasonable one when we consider how AI learns.

Memorizing Data

AI models are trained in two main stages called pre-training and post-training. Pre-training exposes a neural network with randomly initialized weights and biases to a vast amount of data. When this data is labelled, the process is called supervised learning; large language models are more often pre-trained on unlabelled text by predicting the next word, which is called self-supervised learning. The model's weights and biases are adjusted via backpropagation, which strengthens the neural connections that gave better answers. Many people consider this way of learning to be memorizing data, which the model then recites when it is asked a question.
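To make the weight adjustment concrete, here is a minimal sketch, assuming the simplest possible case: a single artificial neuron with one weight and one bias, trained on labelled pairs that follow y = 2x + 1. With only one neuron, backpropagation collapses to a single gradient calculation, so this illustrates the idea and is not how a real model is trained. The function name `train_neuron`, the learning rate, and the toy data are all assumptions made for illustration.

```python
import random


def train_neuron(data, epochs=500, lr=0.05, seed=0):
    """Fit y ~ w*x + b by gradient descent on mean squared error."""
    rng = random.Random(seed)
    # Start from arbitrary (random) weight and bias, as in pre-training.
    w = rng.uniform(-1, 1)
    b = rng.uniform(-1, 1)
    n = len(data)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in data:
            error = (w * x + b) - y        # how wrong the current answer is
            grad_w += 2 * error * x / n    # d(MSE)/dw
            grad_b += 2 * error / n        # d(MSE)/db
        # Nudge the parameters in the direction that reduces the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical labelled training data: inputs -3..3 with targets 2x + 1.
data = [(x, 2 * x + 1) for x in range(-3, 4)]
w, b = train_neuron(data)

print("learned weight and bias:", round(w, 3), round(b, 3))
# x = 10 never appears in the training data.
print("prediction for x = 10:", round(w * 10 + b, 3))
```

The learned weight and bias should land close to 2 and 1, and the prediction for the unseen input 10 should be close to 21. Even this tiny model has stored a rule in its parameters, not a lookup table of the seven examples it was shown.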

Learning like Humans

The pre-training process that trains models on datasets, despite seeming like a "computer" way to learn, is actually very similar to how humans learn. Just as machine learning uses artificial neural networks, humans use biological neural networks to learn. Also like machine learning models, human neural networks are vastly complex and are not yet fully understood.